Dear all, my paper "Improved HTM Spatial Pooler with Homeostatic Plasticity control" received the Best Industrial Paper Award! It was a nice surprise to get this news from the organizing committee last Sunday. This happened last week at the 10th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2021) in Vienna, in the category machine learning theory and models. Some of you may already have heard that I have been doing intensive research in the field of AI and Computational Intelligence over the last few years.

The focus of my interest is the Hierarchical Temporal Memory Cortical Learning Algorithm (HTM CLA), originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee.

Computational Intelligence meets Neurosciences

Inspired by the fantastic fields of neuroscience and computational intelligence, I started doing research in this area with the help of my great students at the Frankfurt University of Applied Sciences. I teach Software Engineering there, with a focus on ML, .NET Core and Cloud Computing.

I have always been excited by the biological functioning of the neocortex

Jeff and his team at Numenta have started amazing work with the idea of reverse-engineering the neocortex. The idea of reverse-engineering the brain is not new. However, I like the approach proposed by Jeff. I truly believe that the brain, at its core, does not implement highly sophisticated math such as differential equations, back-propagation etc. If we want to be successful in this incredibly complex task, we need an approach different from most of those we use in Machine Learning today.

Over time, the HTM CLA was created. It provides a theoretical framework and helps us to better understand how the cortical algorithm inside the brain might work. Today's implementations were created by Numenta in Python, C++ and Java.

This is all great, but I don't think it's very efficient to work with the listed languages. Nothing against these languages, but I'm a Microsoft Regional Director and believe that many industrial .NET-based applications need access to this area. So, I created the HTM CLA in C#/.NET Core and hope to integrate it into ML.NET soon.

I have been analyzing the structure of the neocortex and have read a lot of papers in the field of neuroscience. In the meantime, this topic has become the focus of my PhD work. This research has nothing to do with Software Engineering, but I use my engineering skills to validate my ideas very quickly and to create real experiments.

My motivation is to discover how (human) intelligence works and how to put it into code as a kind of computational intelligence. I have been learning biological facts and trying to create a computational model of some biological aspects.

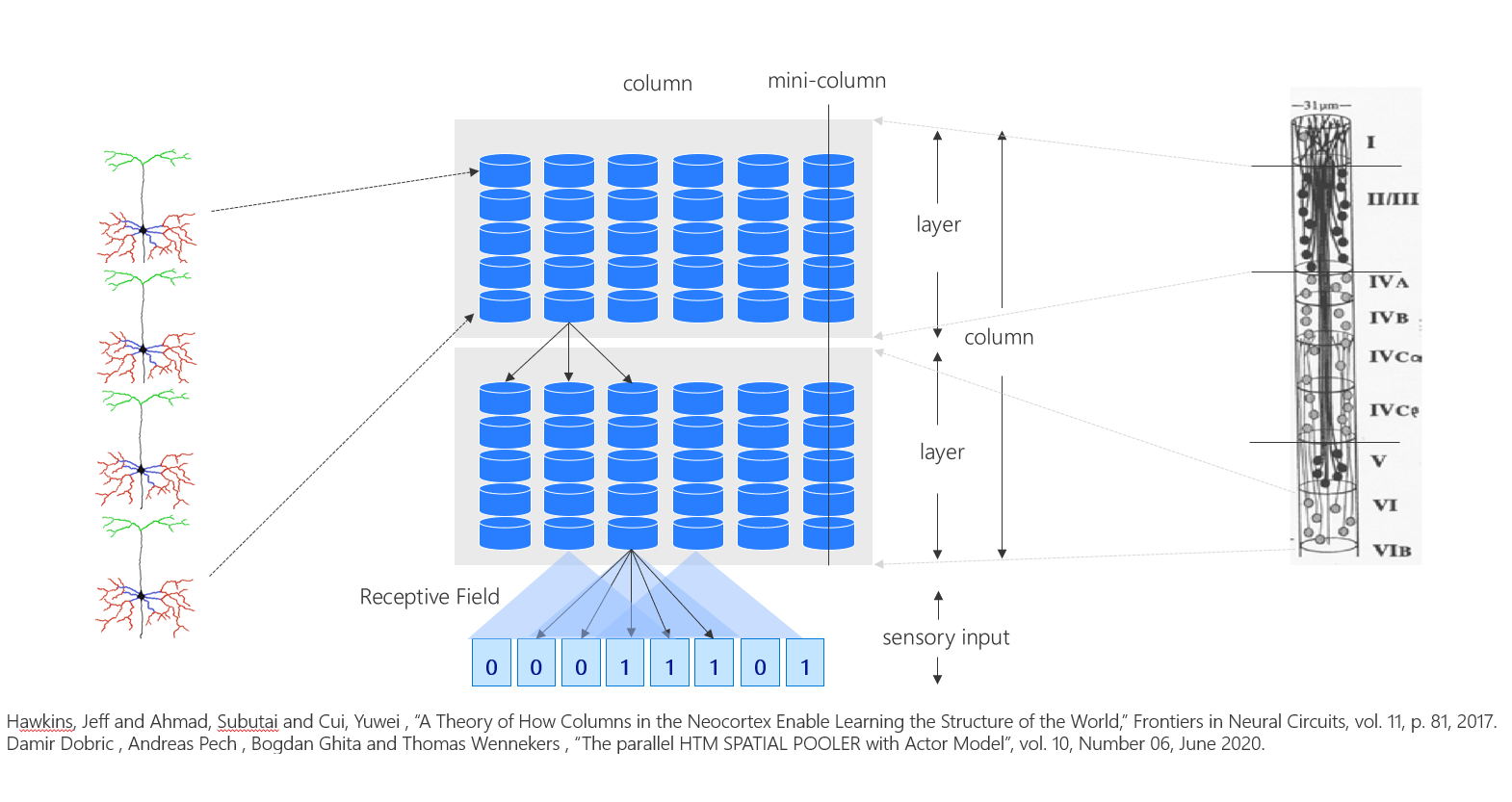

Here is an example of the neocortex tissue (right) modelled as HTM CLA in .NET Core (left).

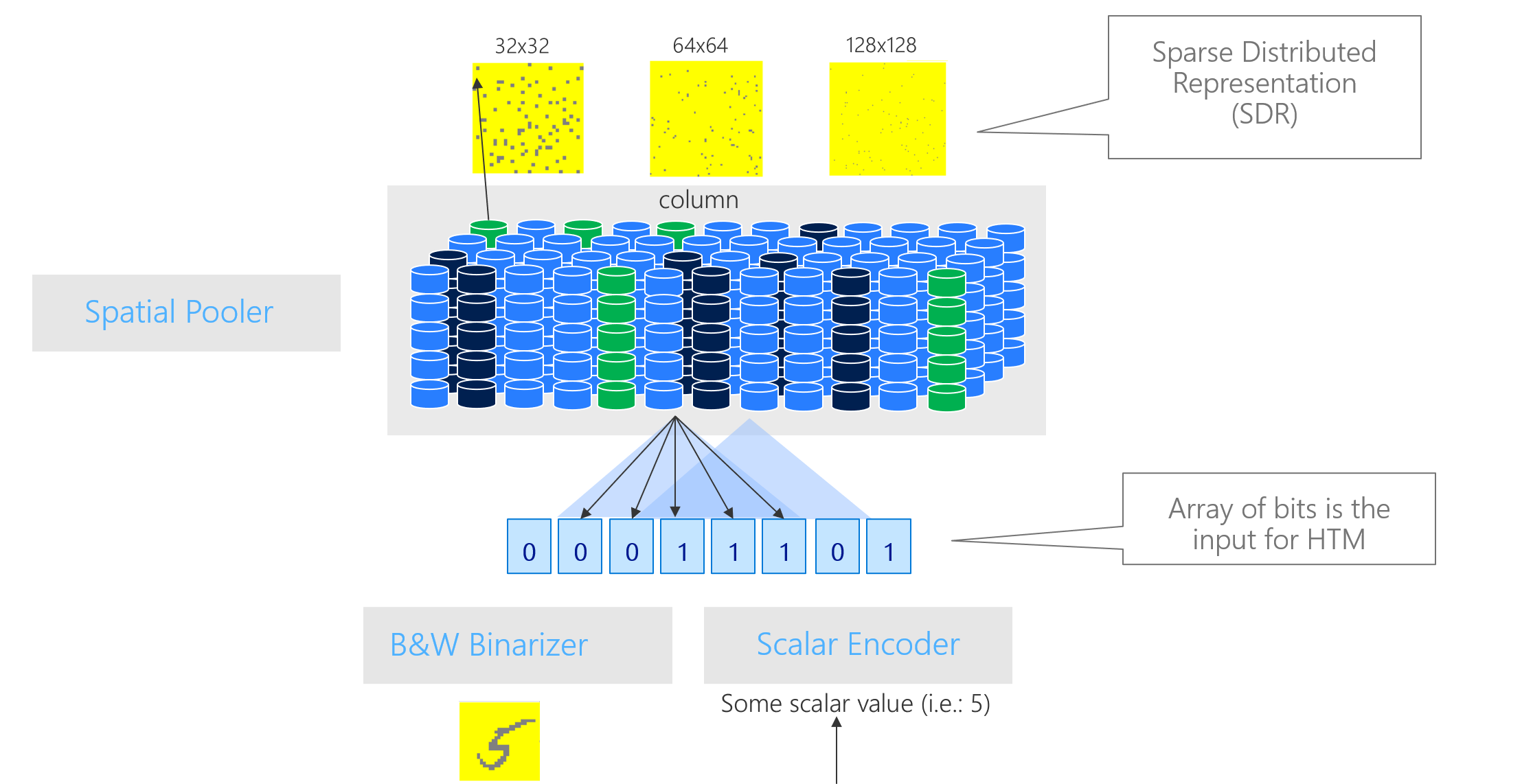

The whole concept is based on recreating, so to speak, a slice of the neocortex in code. We then present some (encoded) input as a bit array to the artificial tissue, which implements two algorithms, the Spatial Pooler and Temporal Memory. Their purpose is to create a sparse representation of the spatial input and of a sequence of spatial inputs. These two algorithms are capable of solving many tasks. They were not developed to recognize images, but they can. They were also not developed to learn sequences, but they can. This is different from nearly everything in AI/ML that we know today.
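To make the idea of turning a bit array into a sparse representation more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions, not the code of neocortexapi or Numenta: the function names, the random wiring and all parameter values are hypothetical, and learning, boosting and local inhibition are left out. Each column counts how many of its connected input bits are on (its overlap), and only the few columns with the highest overlap stay active.

```python
import random


def make_columns(num_columns, input_size, potential_size, seed=0):
    """Give every column a random set of connected input bit indices (hypothetical wiring)."""
    rng = random.Random(seed)
    return [frozenset(rng.sample(range(input_size), potential_size))
            for _ in range(num_columns)]


def compute_sdr(input_bits, columns, active_count):
    """Return the sorted indices of the `active_count` columns with the highest overlap."""
    on_bits = {i for i, bit in enumerate(input_bits) if bit}
    overlaps = [len(col & on_bits) for col in columns]
    # Simplified global inhibition: keep only the top-k columns,
    # breaking ties by column index so the result is deterministic.
    ranked = sorted(range(len(columns)), key=lambda c: (-overlaps[c], c))
    return sorted(ranked[:active_count])


# An encoded input: 100 bits with a contiguous block of 20 bits switched on.
input_bits = [1 if 10 <= i < 30 else 0 for i in range(100)]
columns = make_columns(num_columns=200, input_size=100, potential_size=20)
sdr = compute_sdr(input_bits, columns, active_count=8)
print(sdr)
```

With 8 active columns out of 200, only 4% of the columns represent the input, which is what makes the representation sparse.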

The awarded paper focuses on 'Homeostatic Plasticity'

I found out that some of our experiments are sometimes not stable when it comes to learning. After many experiments, I figured out that the cause of this issue is instability in an algorithm called the Spatial Pooler. This algorithm is a part of the HTM CLA and is responsible for learning spatial patterns. It learns extremely quickly (1-3 cycles), but sometimes forgets. Imagine what happens when your brain starts forgetting things?!

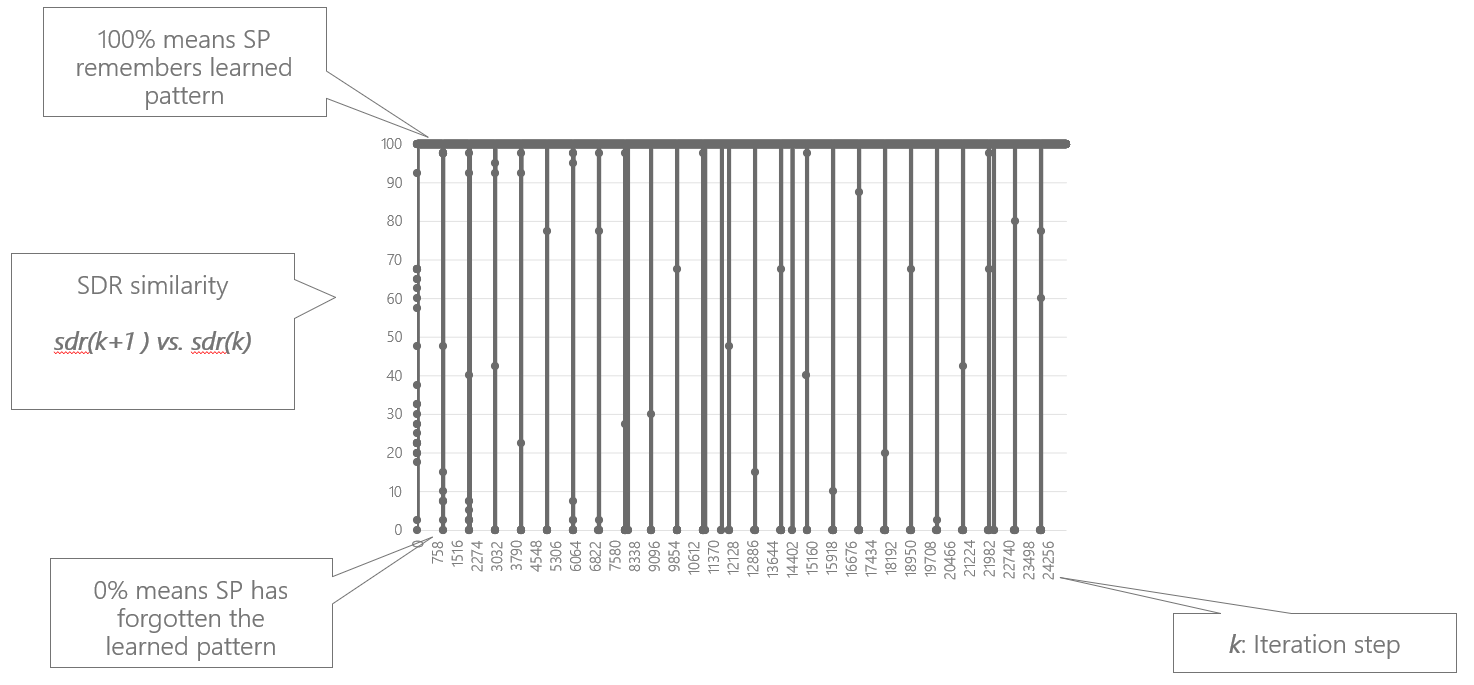

One of the great examples of the HTM approach is my latest paper. I found that the forgetting happens independently of boosting, which is another sub-algorithm inside the Spatial Pooler. The diagram shows the moments of instability.

Every vertical line marks a moment when the Spatial Pooler forgets the pattern. The horizontal line at the top is the period during which the pattern is memorized. This happens when boosting becomes active. So, why not switch off boosting? We cannot do that, because boosting is the implementation of a biological process. If we exclude boosting, we will end up with some new math tweaks whose only purpose is to compensate for the missing piece. This is allowed, but I do not want to follow the common approach that, in the best case, might help me to create just another ML algorithm with a somewhat better benchmark. No, I want to create it the way the brain does it.
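The effect of boosting on a learned pattern can be illustrated with a small Python sketch. It assumes an exponential boost rule of the kind used in some HTM implementations; the rule, the function names and all numbers are assumptions for illustration and are not taken from the awarded paper. A column that has rarely been active gets its overlap multiplied by a factor greater than one, so it can displace a column that has represented the pattern so far.

```python
import math


def boost_factor(active_duty_cycle, target_density, boost_strength):
    """Assumed boost rule: rarely active columns get a factor > 1, busy columns a factor < 1."""
    return math.exp((target_density - active_duty_cycle) * boost_strength)


# Two hypothetical columns competing for the same input pattern.
overlaps = {"A": 10, "B": 8}          # raw overlap with the input
duty_cycles = {"A": 0.30, "B": 0.00}  # how often each column was active recently
TARGET_DENSITY = 0.02                 # desired fraction of active columns


def winner(boost_strength):
    """Return the column with the highest boosted overlap."""
    boosted = {
        name: overlaps[name] * boost_factor(duty_cycles[name], TARGET_DENSITY, boost_strength)
        for name in overlaps
    }
    return max(boosted, key=boosted.get)


print("winner without boosting:", winner(0.0))
print("winner with strong boosting:", winner(10.0))
```

In this toy setting, column A wins as long as boosting is off, and column B takes over once boosting is strong enough. The input is then represented by a different column, which is one way to picture the forgetting described above.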

To solve this piece of the puzzle, I have been investigating an effect in brain biology called the "Homeostatic Plasticity Mechanism". This mechanism is active in the brain throughout the entire life. All neural circuits change their connectivity. This mechanism guarantees the functional stability of the brain and of cognitive functions.

It keeps the excitation and inhibition of cells in balance. So, it is something important. It guarantees the overall cortical network stability.

I found that this mechanism changes during the new-born period of mammals (and probably humans). This means it is switched off in some parts of the neocortex where the Spatial Pooler is supposed to be. So, I came up with the idea of implementing the same mechanism in the HTM CLA. I designed a component, the Homeostatic Plasticity Controller (HPC), that supervises the Spatial Pooler. When created, the HPC puts the Spatial Pooler in the "baby-state". Inspired by findings in neuroscience, I called this the new-born stage. In this stage, the HTM-modelled tissue briefly acts like a baby. It boosts all its neural cells on every input. It learns and forgets. But it makes sure that all neurons get uniformly activated and adapted to the seen patterns.

This most likely shows that we as babies learn and forget, but our brain is adapting to the seen patterns. So the adaptation will be different in a different context (language, place, culture, etc.).

I believe that the highly active boosting might be a reason why we cannot remember anything in our real-life baby-stage.

In this paper, some results also suggest that uncontrolled boosting can cause the brain to behave epileptically, or that epilepsy causes boosting, or both. We don't know yet.

Finally, after the new-born stage (baby time), the HPC disables boosting. The Spatial Pooler keeps learning, but it no longer forgets anything (there are still some exceptions under investigation).
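The control flow described here can be sketched in a few lines of Python. This is a simplified illustration, not the HPC implementation from neocortexapi: the class name, the fixed length of the new-born stage and the stability criterion (the same SDR seen for a number of consecutive cycles) are assumptions made for the example. The sketch keeps boosting enabled during the new-born stage, disables it afterwards, and then reports when the SDR of every seen input has stopped changing.

```python
class HomeostaticPlasticityControllerSketch:
    """Toy controller; names and thresholds are hypothetical."""

    def __init__(self, newborn_cycles, required_stable_cycles):
        self.newborn_cycles = newborn_cycles
        self.required_stable_cycles = required_stable_cycles
        self.cycle = 0
        self.boosting_enabled = True  # new-born stage: boosting is on
        self.last_sdr = {}            # input key -> SDR seen in the previous cycle
        self.stable_cycles = {}       # input key -> consecutive cycles without change

    def observe(self, input_key, sdr):
        """Record the SDR produced for one input; return True once all seen inputs are stable."""
        self.cycle += 1
        if self.boosting_enabled and self.cycle >= self.newborn_cycles:
            self.boosting_enabled = False  # end of the new-born stage
        sdr = tuple(sdr)
        if not self.boosting_enabled and self.last_sdr.get(input_key) == sdr:
            self.stable_cycles[input_key] = self.stable_cycles.get(input_key, 0) + 1
        else:
            self.stable_cycles[input_key] = 0  # SDR changed, or still in the new-born stage
        self.last_sdr[input_key] = sdr
        return (not self.boosting_enabled
                and all(n >= self.required_stable_cycles
                        for n in self.stable_cycles.values()))


# Simulated SDRs for one input: unstable at first, then always the same.
hpc = HomeostaticPlasticityControllerSketch(newborn_cycles=3, required_stable_cycles=2)
history = [hpc.observe("a", sdr) for sdr in ([1, 2], [3, 4], [1, 2], [1, 2], [1, 2])]
print(history, hpc.boosting_enabled)
```

In a real setup, the `boosting_enabled` flag would be passed on to the Spatial Pooler in every cycle. Here it is only tracked, so that the sketch stays independent of any concrete Spatial Pooler API.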

Recap

This publication was the next paper in a series related to my research. By following the true HTM approach, I investigated the biological mechanism and applied it to the HTM model. It worked!

Moreover, it helps me to better understand an important process in the brain. In my opinion, HTM is a great attempt and a promising approach to understanding and implementing true intelligence.

As a part of this research, I also created the neocortexapi and dotnetactors open source projects, which utilize the power of the modern programming language C#, the cross-platform .NET Core framework and Microsoft Azure. Stay tuned.

Want to read more about this research?

Read more about Hierarchical Temporal Memory

Please find the full award-winning paper here: https://www.insticc.org/node/TechnicalProgram/icpram/2021/presentationDetails/103142

Download from neocortexapi

https://github.com/ddobric/neocortexapi/blob/master/NeoCortexApi/Documentation/experiments.md#improved-spatial-pooler-with-homeostatic-plasticity-controller

More about Spatial Pooler algorithm.

Implementation of HTM in C# .NET Core

References: https://github.com/ddobric/neocortexapi/blob/master/README.md

Award certificate