ML.NET is a great approach to democratizing machine learning technology. However, if you want to publish an ASP.NET Core application that depends on ML.NET code, you need to know about a few limitations.

Usually, the tooling around .NET Core and ASP.NET Core is so powerful that you don't have to think about anything (more or less). It is simply easy to bring your application to Azure.

Unfortunately, your ML.NET application will not run that easily.

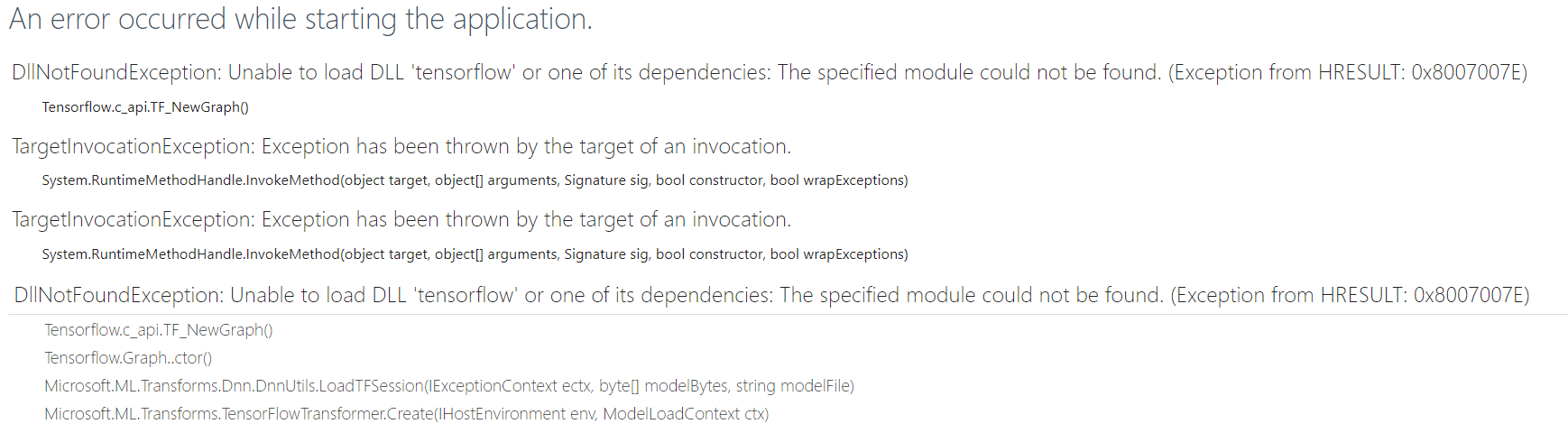

Here is an example of what happens when you deploy your ML.NET application:

System.Reflection.TargetInvocationException: Exception has been thrown by the target of an invocation. ---> System.DllNotFoundException: Unable to load DLL 'tensorflow' or one of its dependencies

When you navigate to your application, you will see something like this:

This issue, or rather a question about this undocumented requirement, has been filed here.

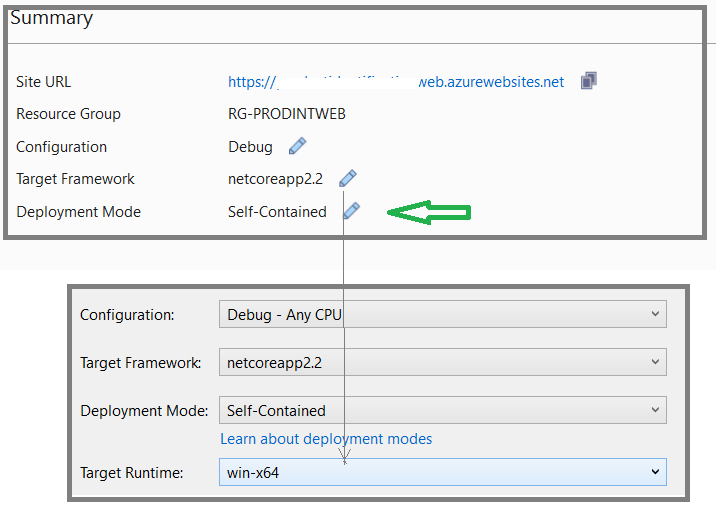

To make this work, you must first build a self-contained application. This is probably not strictly required, but it is good practice for ASP.NET Core applications. Additionally, the application has to be built for the x64 architecture. This is required by ML.NET.
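If you prefer the command line over the Visual Studio publish dialog, a command along these lines should produce a self-contained x64 build. This is a minimal sketch that assumes a Windows App Service (hence the `win-x64` runtime identifier) and uses `./publish` purely as an example output folder; adjust both to your setup.

```shell
# Publish a Release build that bundles the .NET runtime with the app
# (self-contained) and targets 64-bit Windows (win-x64).
# The output folder ./publish is only an example.
dotnet publish -c Release -r win-x64 --self-contained true -o ./publish
```

In the Visual Studio publish profile, the same choices correspond to Deployment Mode "Self-contained" and Target Runtime "win-x64".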

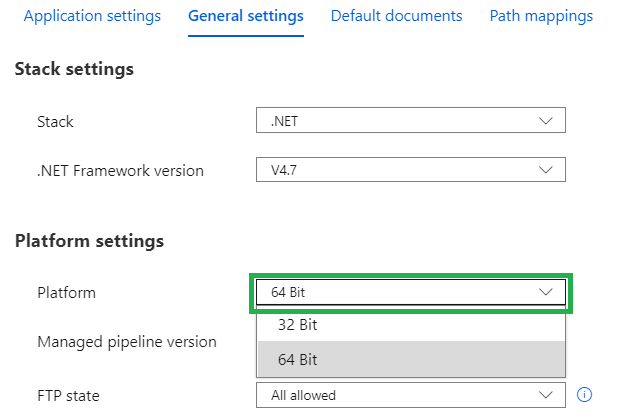

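But this is not all. By default, an App Service typically runs its worker process as 32-bit (x86), so you also have to switch it to 64-bit. You can do this in the Azure portal, under Configuration > General settings > Platform, as shown in the following picture: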
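The same switch can also be scripted with the Azure CLI. A minimal sketch, where `MyResourceGroup` and `MyMlNetApp` are hypothetical placeholders for your own resource group and web app names:

```shell
# Switch the App Service worker process from 32-bit to 64-bit.
# MyResourceGroup and MyMlNetApp are placeholders; replace them with your names.
az webapp config set --resource-group MyResourceGroup --name MyMlNetApp --use-32bit-worker-process false

# Inspect the current value of the setting to confirm the change.
az webapp config show --resource-group MyResourceGroup --name MyMlNetApp --query use32BitWorkerProcess
```

Keep in mind that the Free and Shared App Service plans only offer the 32-bit platform, so the 64-bit option needs a Basic or higher plan.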

Recap

To host an ML.NET ASP.NET Core application in App Service, you need to do the following:

- Build your self-contained ASP.NET Core application for the x64 architecture.

- Configure your App Service to run on the 64-bit (x64) platform.

Hope this helps.

Damir :)